Generative AI is evolving fast, but its economic impact depends on how quickly and widely it is adopted. Since ChatGPT’s debut, the initial excitement has given way to a more measured outlook as businesses weigh the technology’s costs, limitations, and risks. Innovation in areas like agentic AI and multimodal models continues, yet 2025 is shaping up to be a year of both progress and challenges. Companies now demand real-world results, not just experiments, and high costs, errors, and the risk of misuse make adoption tricky. To succeed, AI must be reliable, accurate, and cost-effective, and, in line with broader machine learning trends, businesses are focusing on making it more practical and scalable. Regulators, meanwhile, face the difficult job of balancing innovation with safety while tackling issues like data privacy, bias, and AI’s impact on jobs. As AI keeps advancing, smart policies will be key to harnessing its potential while minimizing risks.

Top AI Trends to Anticipate in 2025

Practical Approaches are Replacing Hype

Interest in generative AI surged in 2022, driving rapid innovation. Adoption, however, has been uneven: many companies struggle to move from experimental projects to full-scale implementation, and both internal productivity tools and customer-facing applications stall on the way from pilot to production. The result is a gap between innovation and practical integration. While many businesses have explored generative AI through proofs of concept, few have fully embedded it into their operations. A September 2024 report by Informa TechTarget’s Enterprise Strategy Group found that although over 90% of organizations had increased their AI use in the past year, only 8% considered their initiatives mature.

Generative AI Expands Beyond Chatbots

When people think of generative AI, they often picture chatbots like ChatGPT and Claude, which are powered by large language models (LLMs). Businesses have largely explored AI through chat-based interfaces, integrating LLMs into their products and services. As the technology evolves, however, the focus is shifting beyond chatbots and beyond text. In 2025, more attention is going to multimodal and media-generating models that work with audio, video, and images, such as OpenAI’s text-to-video model Sora and ElevenLabs’ AI voice generator, expanding AI’s capabilities well beyond text.

AI Agents are the Next Frontier

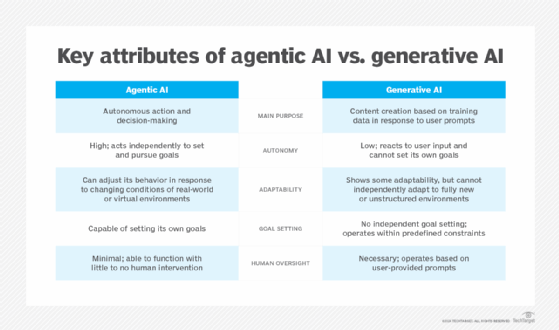

By late 2024, agentic AI had gained momentum, promising smarter automation through independent decision-making. Tools like Salesforce’s Agentforce go beyond basic automation: they can handle workflows, scheduling, and data analysis with minimal human input. Although still in its early days, agentic AI stands out for its adaptability. Unlike traditional rule-based automation, AI agents can learn, adjust to new information in real time, and work around unexpected obstacles, which could make them a breakthrough technology across industries. But greater autonomy brings greater risk. An AI error in scheduling, data analysis, or decision-making could have real-world consequences. Because generative AI can produce inaccurate or fabricated output, strict safeguards are crucial, especially in high-stakes applications where AI decisions directly affect people’s lives.
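To make the safeguard point concrete, here is a minimal Python sketch of one common pattern: an agent loop that executes routine actions automatically but pauses high-stakes ones for human approval. It assumes a hard-coded plan standing in for the decisions a real model would make; `Action`, `run_agent`, and the tool names are hypothetical and not part of Agentforce or any other product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Action:
    """One step an agent wants to take (hypothetical structure)."""
    tool: str
    argument: str
    high_stakes: bool = False


def run_agent(
    plan: List[Action],
    tools: Dict[str, Callable[[str], str]],
    approve: Callable[[Action], bool],
) -> List[str]:
    """Execute planned actions, routing high-stakes ones through a human approver."""
    log = []
    for action in plan:
        # Safeguard: never run a high-stakes action without explicit approval.
        if action.high_stakes and not approve(action):
            log.append(f"skipped {action.tool}: approval denied")
            continue
        result = tools[action.tool](action.argument)
        log.append(f"{action.tool} -> {result}")
    return log


# Hypothetical tools; a real agent would call calendars, databases, payment systems, etc.
tools = {
    "schedule": lambda slot: f"booked {slot}",
    "transfer": lambda amount: f"sent {amount}",
}
plan = [
    Action("schedule", "Mon 10:00"),
    Action("transfer", "$25,000", high_stakes=True),
]

# A reviewer who denies everything: the meeting is booked, the transfer is not.
print(run_agent(plan, tools, approve=lambda action: False))
# ['schedule -> booked Mon 10:00', 'skipped transfer: approval denied']
```

In a real system the plan would be generated, and revised after each result, by the model itself, and deciding which actions count as high-stakes is a policy choice rather than something the code can settle.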

Generative AI Models Will Become Commoditized

Generative AI is moving fast, and foundation models are becoming the norm. The real competitive edge now lies less in having the best model than in how businesses fine-tune these pretrained models or build specialized tools on top of them. Analyst Benedict Evans recently compared the AI boom to the PC industry of the late 1980s and 1990s. Back then, performance was measured by specs like CPU speed, much as today’s AI models are judged by technical benchmarks. Over time, those distinctions mattered less, and factors like cost, user experience, and ease of use became key. A similar shift is under way with foundation models: performance is converging, and many advanced models are now interchangeable for most applications.

In a commoditized model landscape, the focus is shifting from technical specs to usability, trust, and how well AI integrates with existing systems. Companies with strong ecosystems, easy-to-use tools, and competitive pricing are likely to lead the way.
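When models are close to interchangeable, choosing one becomes less about the top benchmark score and more about price and fit. The Python sketch below assumes a simple, hypothetical selection policy: take the cheapest model whose score is within a chosen tolerance of the best. The model names, scores, and prices are made-up placeholders, not real benchmark results or vendor pricing.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelOption:
    name: str             # hypothetical model identifier
    benchmark: float      # hypothetical benchmark score, higher is better
    cost_per_call: float  # hypothetical price in dollars


def choose_model(options: List[ModelOption], tolerance: float) -> ModelOption:
    """Pick the cheapest model whose score is within `tolerance` of the best score."""
    best = max(o.benchmark for o in options)
    good_enough = [o for o in options if best - o.benchmark <= tolerance]
    return min(good_enough, key=lambda o: o.cost_per_call)


options = [
    ModelOption("model-a", 0.88, 0.010),
    ModelOption("model-b", 0.86, 0.004),
    ModelOption("model-c", 0.71, 0.001),
]

print(choose_model(options, tolerance=0.03).name)  # model-b
print(choose_model(options, tolerance=0.0).name)   # model-a
```

With a small tolerance, a two-point benchmark gap no longer justifies paying more than twice as much per call, which is the commoditization dynamic in miniature.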

AI Applications and Datasets Will Become Increasingly Domain-Specific

Prominent AI labs like OpenAI and Anthropic are working toward artificial general intelligence (AGI): AI that can perform any task a human can. Most business applications, however, need neither AGI nor the full breadth of today’s general-purpose foundation models.

From the start of the generative AI boom, businesses have shown interest in narrow, customized models that address specific needs. These tailored applications don’t require the versatility of a consumer chatbot. And while general-purpose models dominate the conversation, businesses should think carefully about how to use any model responsibly and what risks it brings. Rather than starting from the technology, companies should ask who the end users are, what the specific use case is, and where the model will be applied.

Larger datasets have historically improved model performance, but there is growing debate about whether this trend can continue. Some researchers argue that adding more data can stall or even degrade performance in certain cases. In their paper “Scaling Laws Do Not Scale,” Fernando Diaz and Michael Madaio suggest that the assumption that bigger datasets always lead to better results may be flawed, especially when performance is judged from the perspective of particular individuals or communities.
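One way to see how an aggregate gain can hide a loss for a particular group: suppose a model’s overall error $\mathcal{L}$ is an average of per-group errors $\mathcal{L}_g$, weighted by each group’s share $w_g$ of the evaluation data. The two-group numbers below (majority group A with $w_A = 0.9$, minority group B with $w_B = 0.1$) are hypothetical and only illustrate the arithmetic; they are not taken from Diaz and Madaio’s paper.

```latex
\begin{aligned}
\mathcal{L} &= \sum_{g} w_g \, \mathcal{L}_g, \qquad \sum_{g} w_g = 1 \\
\mathcal{L}_{\text{before}} &= 0.9 \times 0.30 + 0.1 \times 0.40 = 0.31 \\
\mathcal{L}_{\text{after}} &= 0.9 \times 0.20 + 0.1 \times 0.60 = 0.24
\end{aligned}
```

Group A’s error falls from 0.30 to 0.20 while group B’s rises from 0.40 to 0.60, yet the headline error still improves from 0.31 to 0.24, so a single aggregate metric would report the change as progress.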

AI Literacy Becomes Essential

As generative AI spreads, AI literacy is becoming essential for everyone in a business: knowing how to use AI tools, evaluate their results, and manage their limitations. While AI talent is in high demand, you don’t need to know how to code or train models to be AI-literate; experimenting with the tools is enough to get started. Despite the buzz, many people still don’t use generative AI regularly. A 2024 study found that fewer than half of Americans use generative AI, and only a quarter use it at work. Adoption is growing fast but hasn’t yet reached the majority: businesses may champion AI, but employees’ actual use of it remains limited.

To address this, universities are offering flexible, skill-based education that applies across different roles. In a fast-changing business environment, few working professionals can take time off for a degree, so modular learning that fits alongside the job is needed to keep up.

Research from Expereo and IDC indicates that skills shortages are preventing companies from achieving their AI objectives. Because generative AI now touches nearly every role, AI literacy has become a sought-after skill at all levels of an organization, from executives to developers to general staff, and the most important part of it is understanding the tools’ constraints.

Businesses Adjust to an Evolving Regulatory Environment

Throughout 2024, companies were challenged by a fragmented and rapidly evolving regulatory landscape. The EU set new compliance standards by passing the AI Act in 2024, while the U.S. remains comparatively unregulated, a situation likely to persist in 2025 under the Trump administration. A light-touch approach may encourage AI development and innovation, but the accompanying lack of accountability raises concerns about safety and equity. To minimize harm without stifling innovation, some stakeholders favor regulation scaled to the risk level of each AI application. Under such a tiered framework, low-risk applications can be fast-tracked to market, while high-risk applications undergo a more thorough review.
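A tiered framework is essentially a lookup from risk level to required process. The Python sketch below assumes four tiers loosely modeled on the EU AI Act’s categories (minimal, limited, high, and unacceptable risk); the example use cases and the review steps attached to each tier are illustrative simplifications, not legal requirements.

```python
from enum import Enum
from typing import Dict, List, Optional


class RiskTier(Enum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Illustrative review steps per tier; None means the application may not be deployed.
REVIEW_STEPS: Dict[RiskTier, Optional[List[str]]] = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["disclose AI use to users"],
    RiskTier.HIGH: ["risk assessment", "human oversight plan", "pre-release audit"],
    RiskTier.UNACCEPTABLE: None,
}

# Hypothetical classification of example use cases.
USE_CASE_TIERS: Dict[str, RiskTier] = {
    "spam filter": RiskTier.MINIMAL,
    "customer service chatbot": RiskTier.LIMITED,
    "credit scoring": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
}


def release_path(use_case: str) -> str:
    """Describe the route to market for a use case under the tiered scheme."""
    steps = REVIEW_STEPS[USE_CASE_TIERS[use_case]]
    if steps is None:  # check None first, since an empty list is also falsy
        return "blocked"
    if not steps:
        return "fast track"
    return "review: " + ", ".join(steps)


for case in USE_CASE_TIERS:
    print(f"{case}: {release_path(case)}")
```

Keeping the tier table separate from the classification makes it easy to tighten a tier’s requirements, or reclassify a use case, without touching the rest of the logic.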

However, minimal oversight in the U.S. does not mean that companies will operate in an entirely unregulated environment. Without a cohesive global standard, large incumbents operating in multiple regions typically adhere to the most stringent regulations. In this way, the EU’s AI Act could function similarly to GDPR, setting de facto standards for companies building or deploying AI worldwide.

Security Concerns Related to AI are Escalating

Generative AI is becoming more accessible, often for free or at a low cost, giving cybercriminals powerful tools to launch cyberattacks. This threat is expected to grow in 2025 as multimodal models become more advanced and easier to obtain. The FBI recently warned that cybercriminals are using AI to run phishing scams and commit financial fraud. For example, an attacker can create a fake social media profile, write a convincing biography and messages with a large language model, and use AI-generated images to make the fake identity seem real.

AI-generated video and audio also present a growing threat. In the past, AI-generated voices and videos had obvious flaws, such as robotic sounds or visual glitches. Today’s tools are far more convincing, especially to a victim who is distracted or in a hurry. Audio generators can now imitate a target’s trusted contacts, such as a spouse or colleague. While video generation is still more expensive and error-prone, deepfakes have already been used in scams: in one major incident in 2024, scammers impersonated a company’s CFO on a video call and tricked an employee into transferring $25 million to fraudulent accounts.

AI models also have vulnerabilities of their own. Attackers can manipulate them through adversarial machine learning techniques such as data poisoning, in which they deliberately insert corrupted or mislabeled examples into a model’s training data. To address these risks, businesses should make AI security a fundamental component of their overall cybersecurity strategy.
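To illustrate how a handful of corrupted training examples can change a model’s behavior, the Python sketch below trains a toy one-feature nearest-centroid classifier (a deliberately simple stand-in for a real model) on clean data, then retrains it after an attacker injects mislabeled points. The feature values and the "benign"/"malicious" labels are invented for the example.

```python
from typing import Dict, List, Tuple


def centroids(samples: List[Tuple[float, str]]) -> Dict[str, float]:
    """Train: compute the mean feature value for each label."""
    sums: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for x, label in samples:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(model: Dict[str, float], x: float) -> str:
    """Predict: return the label whose centroid is closest to x."""
    return min(model, key=lambda label: abs(model[label] - x))


clean = [(1, "benign"), (2, "benign"), (3, "benign"),
         (7, "malicious"), (8, "malicious"), (9, "malicious")]

# Poisoning: the attacker slips in high-valued samples falsely labeled "benign".
poison = [(9, "benign")] * 4

print(predict(centroids(clean), 6))           # malicious
print(predict(centroids(clean + poison), 6))  # benign
```

Four injected points drag the "benign" centroid from 2.0 to 6.0, so an input the clean model flagged as malicious now passes as benign, even though the model’s code never changed.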

Conclusion

The generative AI landscape in 2025 is marked by both progress and challenges. Businesses are moving past the initial hype toward practical, reliable, and cost-effective implementations. The growth of multimodal models signals a maturing industry in search of real value, though concerns around regulation, security threats, and AI literacy persist. As foundation models become more accessible and specialized applications rise, companies must prioritize AI literacy and strengthen their security measures. The future of AI hinges on balancing innovation with responsibility, so that adoption is sustainable and benefits both businesses and society.